NSUF Article

From atoms to earthquakes to Mars: High performance computing

By Cory Hatch, INL Communications

Researchers solving today’s most important and complex energy challenges can’t always conduct real-world experiments.

This is especially true for nuclear energy research. Considerations such as cost, safety and limited resources can often make laboratory tests impractical. In some cases, the facility or capability necessary to conduct a proper experiment doesn’t exist.

At Idaho National Laboratory, computational scientists use INL’s supercomputers to perform “virtual experiments” that accomplish research that couldn’t be done by conventional means. While supercomputing can’t replace traditional experiments, it has become an essential component of modern scientific discovery and advancement.

“Science is like a three-leg stool,” said Eric Whiting, director of Advanced Scientific Computing at INL. “One leg is theory, one is experiment, and the third is modeling and simulation. You cannot have modern scientific achievements without modeling and simulation.”

INL has three supercomputers: Sawtooth, Lemhi and Hoodoo.

HIGH DEMAND RESOURCES

INL’s High Performance Computing program has been in high demand for years. From INL’s first supercomputer in 1993 to the addition of the Sawtooth supercomputer in 2020, the demand for high performance computing has only increased.

Sawtooth and INL’s other supercomputers are flexible enough to tackle a wide range of modeling and simulation challenges and are especially suitable for dynamic and adaptive applications, like those used in nuclear energy research. Together, INL’s supercomputers are one of the Nuclear Science User Facilities’ 50 partner facilities, and they are the only supercomputers in the NSUF network.

Whether it’s exploring the effects of radiation on nuclear fuel or designing nuclear-powered rockets for a trip to Mars, INL’s High Performance Computing center is the Swiss Army knife of advanced computing.

THE POWER OF 100,000 LAPTOPS

On a recent tour of the Collaborative Computing Center, Whiting led the way through the rows of Sawtooth processors. Each row looked like dozens of tall black refrigerators standing side by side. The room hummed with the pumping of thousands of gallons of water needed to keep Sawtooth cool.

Sawtooth is INL’s newest supercomputer, consisting of 2,079 compute nodes, each of which has 48 cores and 192 GB of memory.

Sawtooth contains the computing power of about 100,000 processor cores, all dedicated to very large, high-fidelity problems. That amounts to orders of magnitude more processing power and memory than a traditional laptop computer.
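The “about 100,000” figure follows from the node counts given above. A quick check in Python, using only the numbers stated in this article and assuming every node is identical:

```python
# Sawtooth figures as stated in this article; assumes a homogeneous cluster.
NODES = 2079
CORES_PER_NODE = 48
MEMORY_PER_NODE_GB = 192


def machine_totals(nodes: int, cores_per_node: int, mem_per_node_gb: int) -> tuple[int, int]:
    """Return (total cores, total memory in GB) for identical nodes."""
    return nodes * cores_per_node, nodes * mem_per_node_gb


cores, memory_gb = machine_totals(NODES, CORES_PER_NODE, MEMORY_PER_NODE_GB)
print(f"{cores:,} cores, {memory_gb:,} GB (~{memory_gb / 1000:.0f} TB) of memory")
```

The result, just under 100,000 cores and roughly 400 TB of aggregate memory, is where the round number in the text comes from.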

All that computing power allows researchers from around the world to run dozens of complex simulations at the same time. “If your program is designed right, it runs thousands of times faster than the best-case scenario on your desktop,” Whiting said.

Some of these simulations — modeling the performance of fuel inside an advanced reactor core, for instance — require the computer to solve millions or billions of unknowns repeatedly.

“If you have a multidimensional problem in space, and then you add time to it, it greatly adds to the size of the problem,” said Cody Permann, a computer scientist who oversees one of the laboratory’s modeling and simulation capabilities. Modeling and simulation started decades ago with simplified problems in one or two dimensions. Modern supercomputers like INL’s Sawtooth have significantly increased the accuracy of these simulations, bringing them closer to reality.
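Permann’s point about dimensions and time can be made concrete with a rough count. The sketch below assumes a hypothetical uniform grid with 1,000 points along each axis, one unknown per point and 10,000 time steps. Real reactor simulations use irregular meshes, several coupled fields per point and solvers whose cost grows faster than the unknown count, so this only shows how the count scales, not what any real simulation costs.

```python
def unknowns(points_per_axis: int, dims: int, fields: int = 1) -> int:
    """Unknowns in one time step of a uniform grid: fields * points_per_axis**dims."""
    return fields * points_per_axis ** dims


def total_work(points_per_axis: int, dims: int, time_steps: int, fields: int = 1) -> int:
    """Crude work estimate: the full spatial system is solved once per time step."""
    return unknowns(points_per_axis, dims, fields) * time_steps


# Hypothetical grid: 1,000 points per axis, 10,000 time steps.
for d in (1, 2, 3):
    print(f"{d}D: {unknowns(1000, d):,} unknowns per step, "
          f"{total_work(1000, d, 10_000):,} over 10,000 steps")
```

Each added spatial dimension multiplies the unknowns by a thousand, and adding time multiplies the total again by the number of steps, which is how simulations reach millions or billions of unknowns.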

To solve these complicated problems, researchers break down each simulation into thousands upon thousands of smaller units, each impacting the units surrounding it. The more units, the more detailed the simulation, and the more powerful the computer needed to run it.
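One common way to break a simulation into small units that affect their neighbors is a grid in which each cell is updated from the cells next to it. A minimal sketch, assuming a one-dimensional bar and an explicit finite-difference scheme for heat diffusion; it illustrates the general idea only and is not how any particular INL code discretizes its problems.

```python
def diffuse(u: list[float], alpha: float, steps: int) -> list[float]:
    """Explicit finite-difference update of the 1D heat equation.

    Each interior cell is updated from itself and its two neighbors; the end
    cells are held fixed (Dirichlet boundaries). alpha = D*dt/dx**2 must be
    at most 0.5 for this explicit scheme to remain stable.
    """
    if not 0 < alpha <= 0.5:
        raise ValueError("alpha must be in (0, 0.5] for stability")
    u = list(u)
    for _ in range(steps):
        new = u[:]
        for i in range(1, len(u) - 1):
            new[i] = u[i] + alpha * (u[i - 1] - 2 * u[i] + u[i + 1])
        u = new
    return u


# A hot spot in the middle of a cold bar spreads to neighboring cells.
bar = [0.0] * 11
bar[5] = 100.0
print([round(x, 1) for x in diffuse(bar, alpha=0.25, steps=1)])
# → [0.0, 0.0, 0.0, 0.0, 25.0, 50.0, 25.0, 0.0, 0.0, 0.0, 0.0]
```

Refining the grid (more, smaller cells) makes the result more detailed, but it also multiplies the number of updates per step, which is the trade-off the paragraph describes.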

THE ATOMIC EFFECTS OF RADIATION ON MATERIALS

For Chao Jiang, a distinguished staff scientist at INL, a highly detailed simulation means peering down to the level of individual atoms.

Jiang’s simulations, funded by the Department of Energy’s Nuclear Energy Advanced Modeling and Simulation program and the Basic Energy Sciences program, help nuclear scientists understand how materials behave when their atoms are constantly knocked around by neutrons in a reactor core. These displaced atoms create defects that change the microstructure of the material, and therefore its physical and mechanical characteristics. Such microstructural changes can damage the materials and reduce the lifetime of the reactor. Understanding them helps scientists design better and safer reactors.

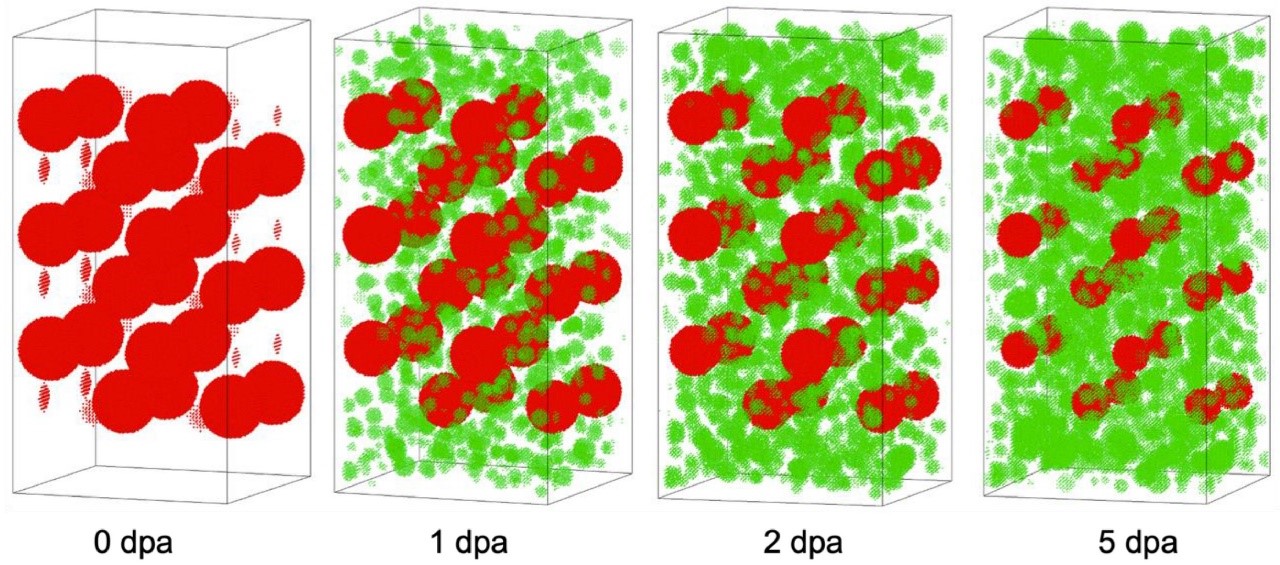

This visualization shows the development of krypton bubbles (green) in chromium with pre-existing void superlattices (red) when exposed to krypton ion irradiation at 250°C (482°F).

“The work we are doing is extremely challenging,” Jiang said. “They are computer-hungry projects. We are big users of the high performance computers.”

Understanding radiation damage in materials is difficult because it involves physical processes that occur across vastly different time and length scales. “When the high energy neutrons hit the material,” Jiang said, “it will locally melt the material.”

This local heating and cooling takes place within picoseconds (a picosecond is one trillionth of a second). As the material cools, it re-solidifies but leaves defects behind, Jiang said. “These residual defects will migrate and accumulate to form large-scale defects in the long run.”
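Defect migration is often pictured as a random walk, in which a defect hops between neighboring lattice sites in random directions. The toy sketch below (a one-dimensional walk with unit hops, an assumption made purely for illustration and not a description of Jiang’s methods) shows that the mean-square distance a defect travels grows in proportion to the number of hops. That is how many tiny, fast hops add up to long-range migration over long times.

```python
import random


def mean_square_displacement(walkers: int, hops: int, seed: int = 0) -> float:
    """Toy 1D random walk: each defect hops one lattice site left or right per step.

    Returns the mean-square displacement in units of (lattice spacing)**2.
    For an unbiased walk the expected value equals the number of hops.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    total = 0
    for _ in range(walkers):
        x = 0
        for _ in range(hops):
            x += rng.choice((-1, 1))
        total += x * x
    return total / walkers


msd = mean_square_displacement(walkers=2000, hops=400)
print(f"MSD after 400 hops: {msd:.0f} (unbiased-walk theory: 400)")
```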

While large defects, such as dislocation loops and voids, can be seen directly using advanced microscopy techniques, many small-scale defects remain invisible under a microscope. These small defects can significantly affect the materials, which makes computer simulations critical for filling this knowledge gap. INL computational scientists combine their simulations with the advanced characterization techniques performed by materials scientists at INL’s Materials and Fuels Complex to advance the understanding of material behavior in a nuclear reactor.

SIMULATING THE IMPACTS OF EARTHQUAKES ON REACTOR MATERIALS

Another INL scientist, Chandu Bolisetti, also simulates the damage to materials, but on a much different scale.

Bolisetti, who leads the lab’s Facility Risk Group, uses high performance computing to simulate the effects of seismic waves — the shaking that results from an earthquake — on energy infrastructure such as nuclear power plants or dams.

In early 2021, with funding from the DOE Office of Technology Transitions, Bolisetti and his colleagues performed an especially complex type of simulation: they modeled the impacts of seismic waves on a nuclear power plant building that houses a molten salt reactor.

A molten salt reactor is a particularly difficult physics problem because the fuel, dissolved in the molten salt coolant, circulates through the reactor in liquid form. The team also placed their hypothetical reactor on seismic isolators, giant shock absorbers that help reduce the impacts of earthquakes on buildings.
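Why seismic isolators help can be seen in the textbook single-degree-of-freedom model of a structure on a flexible, damped support. Isolators lengthen the building’s natural period well beyond the periods that dominate the shaking, and once the shaking-to-natural frequency ratio exceeds √2, the structure moves less than the ground. This is a far simpler idealization than the team’s MASTODON analysis, and the periods and damping below are assumed, illustrative values.

```python
import math


def transmissibility(freq_ratio: float, damping_ratio: float) -> float:
    """Ratio of structure motion to ground motion for a damped
    single-degree-of-freedom system under steady harmonic base shaking.

    freq_ratio is the shaking frequency divided by the structure's natural
    frequency; values above sqrt(2) mean the structure moves less than the ground.
    """
    two_zr_sq = (2.0 * damping_ratio * freq_ratio) ** 2
    return math.sqrt((1.0 + two_zr_sq) / ((1.0 - freq_ratio ** 2) ** 2 + two_zr_sq))


# Assumed, illustrative values: shaking dominated by 0.3 s periods, a stiff
# conventional building with a 0.25 s period, an isolated building with a
# 2.5 s period, and 10% damping in both cases.
SHAKING_PERIOD_S = 0.3
DAMPING = 0.1
r_fixed = 0.25 / SHAKING_PERIOD_S     # frequency ratio = natural period / shaking period
r_isolated = 2.5 / SHAKING_PERIOD_S

print(f"conventional: {transmissibility(r_fixed, DAMPING):.2f}x ground motion")
print(f"isolated:     {transmissibility(r_isolated, DAMPING):.3f}x ground motion")
```

In this toy model the stiff building amplifies the shaking, while the isolated one transmits only a few percent of it.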

MASTODON is a MOOSE-based simulation code used to model how earthquakes can affect nuclear power plants.

Bolisetti’s team ran the simulation using MOOSE, which stands for Multiphysics Object Oriented Simulation Environment, a software framework that allows researchers to develop modeling and simulation tools for solving multiphysics problems. For these earthquake simulation problems, Bolisetti’s team uses MASTODON, which they developed using MOOSE specifically for seismic analysis.

Another project funded by INL’s Laboratory Directed Research and Development program looks at how a molten salt reactor behaves in an earthquake in much more detail. It extends the analysis to include neutronics and thermal hydraulics — in other words, how the shaking impacts nuclear fission and the distribution of heat in the reactor core.

“All three of these physics — earthquake response, thermal hydraulics and neutronics — are pretty complicated,” Bolisetti said. “No one has ever combined these into one simulation. How the power in the reactor fluctuates during an earthquake is important for safety protocols. It affects what the operators would do during an earthquake and helps us understand the core physics and design safer reactors.”

“Real-world experiments to simulate this are close to impossible, especially when you add neutronics,” Bolisetti said. “That’s where these kinds of multi-physics simulations really shine.”
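Simulations that couple separate physics often pass results back and forth between solvers until the answers agree. A minimal sketch of that idea (a fixed-point, or Picard, iteration) using two made-up linear “physics” models: a hotter core produces less power, and more power makes the core hotter. The models and coefficients are hypothetical and are not taken from MOOSE, MASTODON or Griffin, and earthquake response is left out entirely.

```python
def picard_coupling(tol: float = 1e-12, max_iter: int = 100) -> tuple[float, float, int]:
    """Toy fixed-point (Picard) iteration between two coupled 'physics'.

    Hypothetical normalized models, chosen only for illustration:
      neutronics: power = 1 - 0.5 * (temp - 1)   (hotter core -> less power)
      thermal:    temp  = 1 + 0.4 * power        (more power -> hotter core)
    """
    temp = 1.0
    for it in range(1, max_iter + 1):
        power = 1.0 - 0.5 * (temp - 1.0)   # "neutronics" solve at fixed temperature
        new_temp = 1.0 + 0.4 * power       # "thermal" solve at fixed power
        if abs(new_temp - temp) < tol:     # stop when the two solves agree
            return power, new_temp, it
        temp = new_temp
    raise RuntimeError("coupling did not converge")


power, temp, iters = picard_coupling()
print(f"power={power:.6f}, temp={temp:.6f} after {iters} iterations")
```

Here each pass shrinks the disagreement by a factor of five, so the loop settles quickly; with strongly coupled physics the iteration can converge slowly or not at all, which is part of what makes combined simulations hard.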

SIMULATING NUCLEAR ROCKETS FOR A TRIP TO MARS

Mark DeHart, a senior reactor physicist at INL, uses MOOSE to simulate an entirely different kind of complex machine: a nuclear thermal rocket that could someday take humans to Mars.

The rocket would use hydrogen as both a propellant and a coolant. When the rocket is in use, hydrogen would run from storage tanks through the reactor core. The reactor would rapidly heat the hydrogen before it exits the rocket nozzles.

“The hydrogen that comes out is pure thrust,” DeHart said.

Compared with chemical rockets, nuclear thermal rockets are faster and twice as efficient, and they could cut travel time to Mars in half.
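One common way to read “twice as efficient” is in terms of specific impulse, the rocket engineer’s measure of how much thrust an engine gets from each unit of propellant. The Tsiolkovsky rocket equation shows why that matters: for the same ratio of fueled to empty mass, doubling specific impulse doubles the change in velocity the rocket can achieve. The 450 s and 900 s values below are assumed round numbers, not figures from the NASA program, and the sketch ignores that a reactor adds engine mass.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2


def delta_v(isp_s: float, mass_ratio: float) -> float:
    """Tsiolkovsky rocket equation: delta-v = Isp * g0 * ln(m_initial / m_final)."""
    return isp_s * G0 * math.log(mass_ratio)


# Illustrative values only: a chemical engine and a nuclear thermal engine
# with twice its specific impulse, both with the same mass ratio of 3.
chem = delta_v(isp_s=450, mass_ratio=3.0)
ntr = delta_v(isp_s=900, mass_ratio=3.0)
print(f"chemical: {chem / 1000:.1f} km/s, nuclear thermal: {ntr / 1000:.1f} km/s")
```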

One big challenge is rapidly heating the reactor core from about 27 degrees Celsius (80 degrees Fahrenheit) to nearly 2,760 degrees Celsius (5,000 degrees Fahrenheit) without damaging the reactor or the fuel.

DeHart and his colleagues are using Griffin, a MOOSE-based advanced reactor physics tool, for multiphysics modeling of two aspects of the NASA mission.

The first project tests the fuel’s performance as it experiences rapid heating in the reactor core. Real-world fuel samples are placed in INL’s Transient Reactor Test Facility (TREAT), where they are rapidly brought up to temperature.

The data from those experiments are used to create and validate models of the fuel’s neutronics and heat transfer characteristics using Griffin.

“If we can show that Griffin can model this real-world sample correctly, we can have confidence that Griffin can calculate correctly something that doesn’t exist yet,” DeHart said.

The second project is designing the rocket engines themselves. Automated controllers rotate drums in the reactor core to bring the temperature up and down. “We’ve developed a simulation that will show how you can use the control drums to bring the reactor from cold to nearly 5,000 F within 30 seconds,” DeHart said.
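The temperatures and the quoted 30-second ramp imply a steep average heating rate. A quick conversion check, assuming a straight-line ramp for the average; the actual control-drum schedule is not described here.

```python
def f_to_c(deg_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (deg_f - 32.0) * 5.0 / 9.0


START_F, TARGET_F, RAMP_S = 80.0, 5000.0, 30.0  # values quoted in this article

start_c, target_c = f_to_c(START_F), f_to_c(TARGET_F)
rate_c = (target_c - start_c) / RAMP_S   # average heating rate, degrees C per second
rate_f = (TARGET_F - START_F) / RAMP_S   # the same rate in degrees F per second
print(f"{start_c:.1f} C -> {target_c:.0f} C: about {rate_c:.0f} C/s ({rate_f:.0f} F/s)")
# → 26.7 C -> 2760 C: about 91 C/s (164 F/s)
```

On average, the core would have to climb roughly 90 degrees Celsius every second of the ramp.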

Without high performance computing and MOOSE, developing a nuclear thermal rocket would take dozens of small experiments costing hundreds of millions of dollars.

AN OPPORTUNITY FOR COLLABORATION

In the end, high performance computing makes INL a gathering place for researchers with a wide range of expertise, from rocket design to artificial intelligence. About half the system’s users are from national labs, with a quarter coming from universities and a quarter from industry. The resulting collaborations are especially important for nuclear energy research.

“INL cannot attract all the experts in our field, but by sharing a computer, INL’s team can work with 1,200 experts across the United States,” Whiting said. “INL’s supercomputers are helping build the expertise and develop the tools so they can deploy next-generation reactors.”

And the demand for these modeling and simulation resources is only growing. Sawtooth added more than four times the capacity to INL’s high performance computing capabilities, and already the line of projects waiting in the queue can reach into the thousands.

“We need years of research with the High Performance Computing facility,” said Jiang. “We need to understand the high energy state of nuclear materials as accurately as possible, so we need to explore a huge space. Without high performance computing, basic energy research would suffer. It’s critical.”


About Us

The Nuclear Science User Facilities (NSUF) is the U.S. Department of Energy Office of Nuclear Energy's only designated nuclear energy user facility. Through peer-reviewed proposal processes, the NSUF provides researchers access to neutron, ion, and gamma irradiations, post-irradiation examination and beamline capabilities at Idaho National Laboratory and a diverse mix of university, national laboratory and industry partner institutions.
